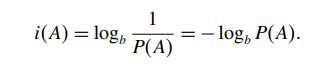

Although the idea of a quantitative measure of information has been around for a while, the person who pulled everything together into what is now called information theory was Claude Elwood Shannon, an electrical engineer at Bell Labs. Shannon defined a quantity called self-information. Suppose we have an event A, which is a set of outcomes of some random experiment. If P(A) is the probability that the event A will occur, then the self-information associated with A is given by

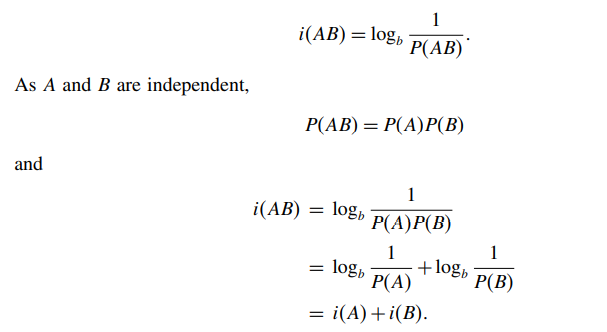

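Another property of this mathematical definition of information that makes intuitive sense is that the information obtained from the occurrence of two independent events is the sum of the information obtained from the occurrence of the individual events. Suppose A and B are two independent events. Writing $i(A) = -\log_b P(A)$ for the self-information of A, the self-information associated with the occurrence of both event A and event B is

$$i(AB) = \log_b \frac{1}{P(AB)}.$$

Because A and B are independent, $P(AB) = P(A)P(B)$, and so

$$i(AB) = \log_b \frac{1}{P(A)P(B)} = \log_b \frac{1}{P(A)} + \log_b \frac{1}{P(B)} = i(A) + i(B).$$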

The unit of information depends on the base of the log. If we use log base 2, the unit is bits; if we use log base e, the unit is nats; and if we use log base 10, the unit is hartleys.
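The definition and its two properties can be checked numerically. The following is a minimal Python sketch, assuming the standard form of self-information, the negative logarithm of the event's probability. The function name `self_information` and the example probabilities are illustrative choices and do not come from the text.

```python
import math


def self_information(p, base=2.0):
    """Return -log_base(p), the self-information of an event with probability p.

    The name and signature are hypothetical, chosen for this illustration.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    # Change of base: log_base(p) = ln(p) / ln(base).
    return -math.log(p) / math.log(base)


# Example probabilities for two independent events (assumed values).
p_a, p_b = 0.5, 0.25

# The same event measured in three different units.
info_bits = self_information(p_a, 2)
info_nats = self_information(p_a, math.e)
info_hartleys = self_information(p_a, 10)

# For independent events, P(AB) = P(A) * P(B), so the information adds.
info_joint = self_information(p_a * p_b)
info_sum = self_information(p_a) + self_information(p_b)

print(f"i(A) = {info_bits} bits = {info_nats} nats = {info_hartleys} hartleys")
print(f"i(AB) = {info_joint} bits, i(A) + i(B) = {info_sum} bits")
```

An event with probability 0.5 carries exactly one bit, and changing the base only rescales the value: the figure in nats is the figure in bits multiplied by ln 2.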